The search box hasn’t changed shape, yet the experience around it no longer feels static. What looks like a small product tweak is really the result of a slow build finally reaching public visibility. Gemini shot up the app charts and people began speaking to Google the way they speak to another person, not a directory.

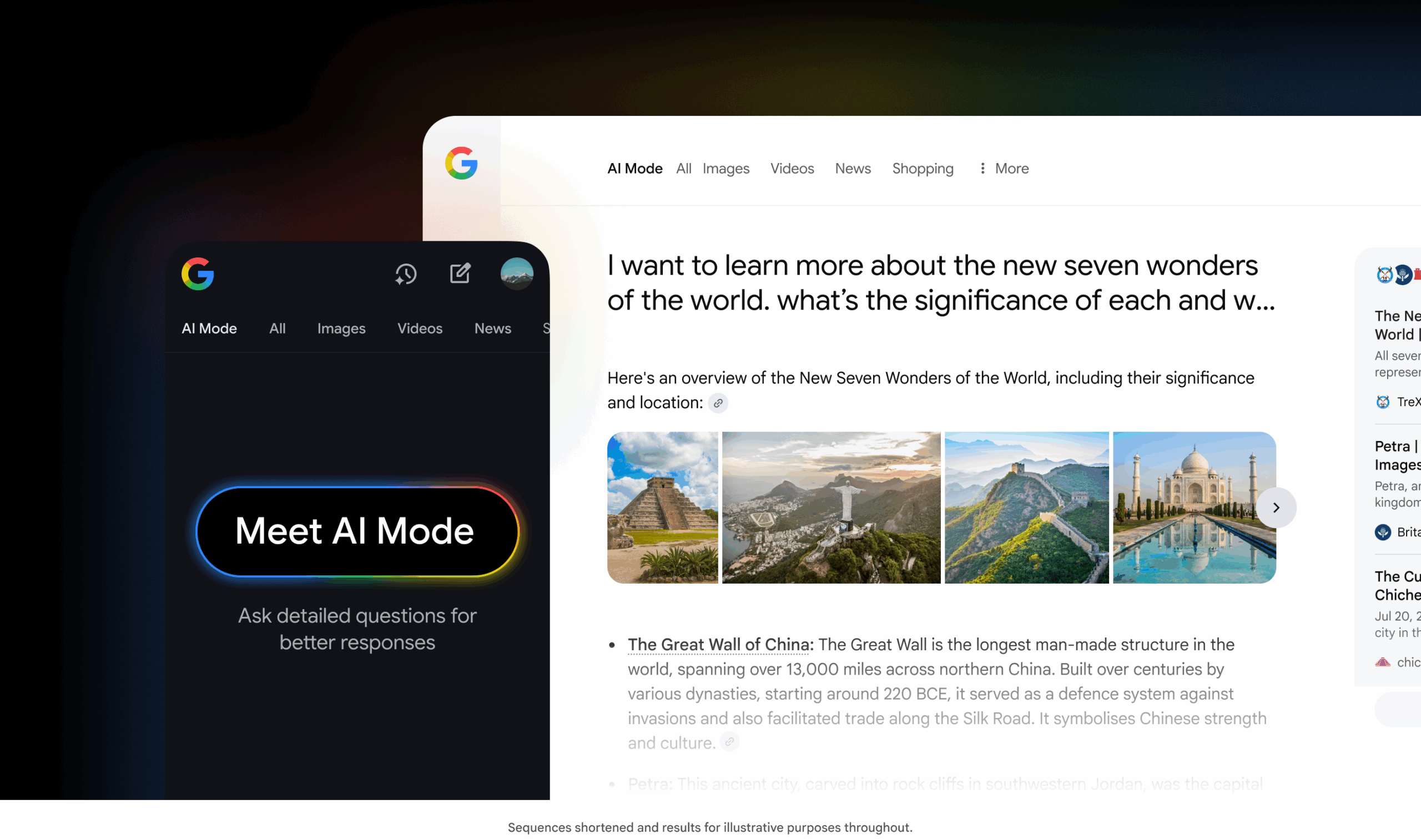

AI Mode is not a chat window bolted to a search page. It lets users ask in full sentences, follow up, and pull maps, prices, live data and images into the same thread. The design relies on large models stitched into older infrastructure but presented as a single surface rather than a handoff between tools.

A new rhythm for old habits

AI Mode behaves like an expansion joint, absorbing questions that no longer fit a keyword box. People who once typed a few keywords now ask layered questions: compare prices, add context, locate something, then refine it further. Visual queries through Lens are climbing because users like taking a photo instead of describing an object. Behind the scenes, Google pulls shopping data, maps information and live signals to make the answers feel grounded. That stack is what separates Google’s approach from a generic chatbot without access to real-world data.

What AI Mode is built to solve

The system focuses on information tasks rather than creativity or productivity suites. Trip planning, comparisons, research questions, and time-sensitive factual lookups sit at its core. The model fans out multiple sub-queries, checks live sources and then assembles an answer. For creators and publishers, that matters: authority and usefulness still shape whether content surfaces inside these summaries.
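To make the fan-out idea concrete, here is a minimal Python sketch of the pattern described above: split a question into sub-queries, look each one up in parallel, then assemble the results. It is an illustration only, not Google's implementation. The names `decompose`, `lookup`, `answer` and the `PLACEHOLDER_SOURCES` table are all hypothetical, and the "live sources" are stubbed with placeholder strings.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional, Tuple

# Hypothetical stand-in for live sources. A real system would query search,
# maps or shopping backends; these strings are placeholders, not real data.
PLACEHOLDER_SOURCES = {
    "flights nairobi to mombasa": "(placeholder) flight snippet",
    "hotels in mombasa": "(placeholder) hotel snippet",
}

def decompose(question: str) -> List[str]:
    """Toy decomposition (an assumption): split a multi-part question on ';'."""
    return [part.strip().lower() for part in question.split(";") if part.strip()]

def lookup(sub_query: str) -> Tuple[str, Optional[str]]:
    """Return (sub_query, snippet); snippet is None when no source matches."""
    return sub_query, PLACEHOLDER_SOURCES.get(sub_query)

def answer(question: str) -> str:
    """Fan out the sub-queries in parallel, then assemble one response."""
    sub_queries = decompose(question)
    with ThreadPoolExecutor(max_workers=4) as pool:
        # pool.map returns results in the same order as the inputs.
        results = list(pool.map(lookup, sub_queries))
    lines = []
    for sub_query, snippet in results:
        # Keep each claim next to the sub-query it came from, so a gap is
        # visible rather than silently dropped.
        lines.append(f"- {sub_query}: {snippet if snippet else 'no source found'}")
    return "\n".join(lines)

if __name__ == "__main__":
    print(answer("Flights Nairobi to Mombasa; hotels in Mombasa; parking at the airport"))
```

The sketch keeps every sub-query visible in the final answer, including the one with no matching source, which mirrors the article's point that sourcing and traceability decide whether a summary earns trust.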

The interface feels familiar until it doesn’t

Ask a messy, multi-part question and you get a coherent response without rewriting it into keyword fragments. You can keep going with clarifying prompts. You can start from a photo and continue the thread as if it were text. Even voice input has become less of a gimmick and more of a hands-free default in some settings. Children test it with homework. Drivers use it for directions. The expectation has subtly shifted from “search” to “ask”.

Friction under the surface

Source transparency is the first point of tension. Summaries are convenient, but publishers and policymakers will question how credit and traffic are handled. The second fault line sits in Google’s dominance of live data. The combination of maps, commerce information and indexed pages makes answers powerful, but also concentrates control over what people see first.

Habit change is the third tension. Once users trust the synthesis, click-through rates may fall. Google argues that links remain visible and useful, but the shift in behavior will reveal where the web’s referral structure bends or breaks.

Product lessons applied in real time

This rollout followed a familiar internal playbook: start small, test, correct, then widen access. The approach echoes earlier tech products that only took off after enough real-world usage shaped them. The design choice to leave the traditional search box intact while layering conversation on top mirrors other platforms that introduced new behavior without discarding the old one.

Three plausible arcs ahead

One scenario sees search and conversational answers blending slowly. Simple queries stay terse while complex ones default to AI Mode. Another splits the experience: a quick search lane and a deeper conversational workspace. Power users drift to the latter. A third scenario involves sharper competition. Rivals may win in narrow domains with deeper trust or richer vertical data while Google leans on scale and live signals.

What signals matter now

Watch how summaries handle sourcing. If people can trace claims back to original reporting or data with minimal friction, trust will hold. Also watch user behavior in commerce and local queries. If fewer people click through, publishers, retailers and platforms will rethink distribution and revenue models.

Multimodality is another marker. Camera-based queries and live voice sessions point to a world where text search is not the only gate. That shift will influence how information is designed, verified and surfaced.

Opportunity and risk in the same frame

This transition is driven less by flawless technology and more by a change in user posture. A helpful information assistant has to balance speed and accuracy with visible sourcing and easy verification. If AI Mode pulls that off, search could feel more like collaboration than extraction. If not, users will find the limits and adjust their trust accordingly. Either way, this is not a cosmetic update — it is a structural turn in how discovery and answers are packaged.

Go to TECHTRENDSKE.co.ke for more tech and business news from the African continent.
